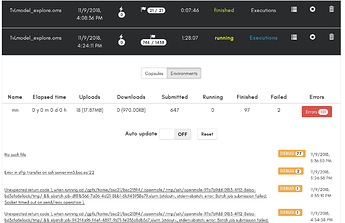

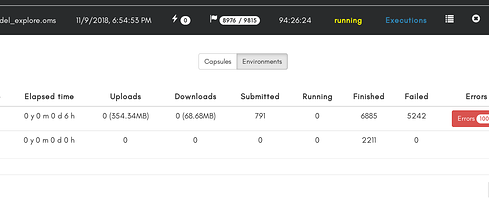

It looks like my jobs are running on my SLURM cluster. Some tasks fail with errors of various types, but in the end the run seems to finish (see the pictures: I first ran a simple experiment with 3 parameter sets that finished fine, and now a much bigger one that is still running).

The problem is that I think OpenMOLE is launching one task per job, each running for a short period of time. That is not very good for a cluster (and I know that on our machine, jobs requesting more CPU × THREAD get priority).

I saw that this problem is mentioned in the doc here and I tried it, but it didn't go very well.

Does this

    model on env by 100 hook ToStringHook()

really mean that the tasks sent to env will be grouped 100 at a time, and is that supposed to work with SLURM?

On the other hand, at my work they developed a tool called GREASY (cf: https://github.com/BSC-Support-Team/GREASY/) that I have used heavily since I started working here, without any problems.

It takes a simple text file with one task per line and spreads the tasks over the nodes and threads allocated by SLURM. If there are more tasks than CPU × THREAD, it waits for a task to finish before sending a new one. If at the end some tasks haven't finished or have failed, it creates a .rst file with the list of those tasks. You then just need to run GREASY again with this .rst file instead of the original one.

This way the programmer only needs to submit one job to SLURM, which looks like this:
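To make the behaviour I am describing concrete, here is a rough shell emulation of it. This is only a sketch of the idea and is not how GREASY itself is implemented; `run_tasks` and its three arguments are names I made up, and it only uses the workers of a single machine rather than a SLURM allocation.

```shell
#!/bin/sh
# Rough emulation of the scheduling idea described above (NOT GREASY's code).
# Usage: run_tasks TASKFILE WORKERS RESTARTFILE   (hypothetical helper)
run_tasks() {
    taskfile=$1
    workers=$2
    restart=$3

    # Start from an empty restart file.
    : > "$restart"

    # xargs keeps at most $workers tasks running and starts the next line
    # as soon as a slot frees up. Each line is run as a shell command;
    # if it exits non-zero, the line is appended to the restart file.
    xargs -P "$workers" -I{} \
        sh -c 'sh -c "$1" || printf "%s\n" "$1" >> "$0"' "$restart" {} \
        < "$taskfile"
}
```

Calling `run_tasks listofile.txt 4 listofile.rst` would run four tasks at a time, and a second call with `listofile.rst` as the task file would retry only the ones that failed.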

    #!/bin/bash
    #SBATCH -o job-c1c00a56-80d3-49d6-bd1f-360c36c8f965.out
    #SBATCH -e job-c1c00a56-80d3-49d6-bd1f-360c36c8f965.err
    #SBATCH --nodes=4
    #SBATCH --cpus-per-task=48
    #SBATCH --time=00:10:00
    greasy listofile.txt

with listofile.txt containing:

    run_00e01f85-183e-4c46-b5c6-69311fdb85a2.sh
    run_0193b1af-ed6a-4a31-aa63-1bc0484d6071.sh
    run_01c2e7ab-761b-4880-990a-45abae600c26.sh
    run_01c5daa6-7e92-4448-883c-c984e6158eac.sh
    run_01f9cbe5-bf87-4a4c-a0c6-757aa44e3c1e.sh
    run_026d709a-fe3a-4a41-ba06-031a2507bce7.sh
    run_037346e1-a71a-41db-a554-c9cc972e3031.sh
    ...
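A task file like this one is easy to produce once the run scripts exist. As a minimal sketch, assuming the scripts all sit in one directory and follow the `run_*.sh` naming shown above (`make_task_list` and its arguments are hypothetical names, not part of GREASY or OpenMOLE):

```shell
#!/bin/sh
# Write the name of every run_*.sh script found in DIR to OUT, one per line.
# Usage: make_task_list DIR OUT   (hypothetical helper)
make_task_list() {
    dir=$1
    out=$2
    for script in "$dir"/run_*.sh; do
        # Skip the unexpanded pattern when no script matches.
        [ -e "$script" ] || continue
        basename "$script"
    done > "$out"
}
```

For example, `make_task_list . listofile.txt` would list the scripts of the current directory in glob (alphabetical) order.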

I don’t know much about Scala, and I am not sure how much work it would be to adapt your SLURM environment to use GREASY. Do you think I could give it a try, and where should I start?